Improving Earthquake Monitoring with Deep Learning

Release Date: MARCH 12, 2021

On January 20, 2021 at 8:32am light shaking interrupted breakfast customers at a local coffee shop south of downtown Los Angeles, California. Everyone paused briefly while they waited to see if it was going to stop… or start shaking harder.

A few mobile phones pinged a couple of minutes later with the information that a magnitude 3.5 (M3.5) earthquake a few miles away in Inglewood was what they had felt. Ideally, earthquake information would always arrive so quickly, but that’s not always the case. And information about moderate to large earthquakes in some locations can take 15-20 minutes to arrive in the hands of those who want or need it. Larger earthquakes mean more data from more seismic stations, and that means a longer processing time for the monitoring system and the seismologist reviewing it.

Only a few decades ago, this same information would’ve taken several days to determine. Drive to the seismic station, retrieve the photographic film, bring it back to the lab, process it. With several earthquake recordings in hand and a ruler, measure the height of the wiggles and the time between the wiggles recorded at each station, and then triangulate on a location and estimate a magnitude. Today’s computer and communication technologies have mostly automated this process and made it much faster. Even so, seismologists manually review automated earthquake locations and magnitudes to make corrections when warranted because sometimes computer programs make mistakes. In a recent study, Yeck’s research team explored deep learning as a way to improve the automated earthquake monitoring program used by the National Earthquake Information Center (NEIC) so that there would be fewer mistakes and, ultimately, less need for manual reviews.

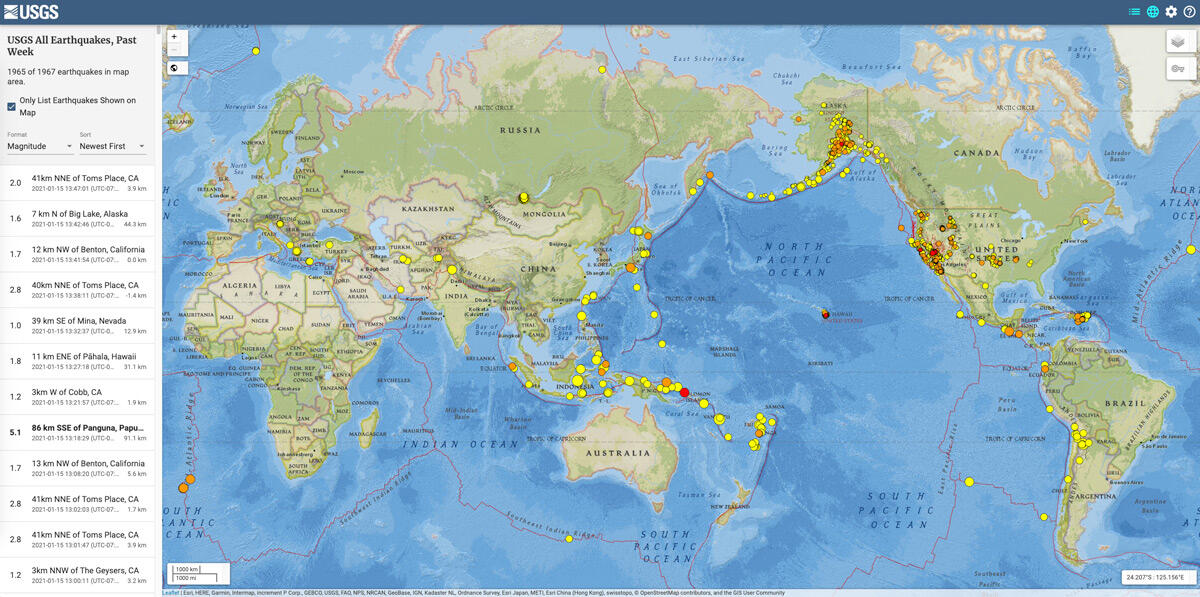

The NEIC processes M2.5+ earthquakes in the U.S. and M4.5+ earthquakes across the globe. This amounts to about 30,000 earthquakes each year. NEIC’s automated processes continuously monitor signals from seismic stations around the world to detect changes that look different from the background noise, using what is called a short-term average/long-term average (STA/LTA) detector. The system tags the detection times of seismic wave arrivals at each station and tries to associate the detections from individual stations into a single dataset for a possible earthquake. The system then identifies the type of seismic wave, determines the location of the earthquake, and estimates the earthquake’s magnitude. Seismologists validate the automated arrival times and make corrections and adjustments to improve the accuracy of the earthquake location and magnitude before the earthquake information is distributed to mobile phones, sent to email addresses, and published to the USGS Earthquake Hazards Program website.
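To make the STA/LTA idea concrete, here is a minimal sketch in Python. It is not NEIC's code: the function names, window lengths, threshold, and the synthetic trace are all assumptions chosen for illustration. The detector compares the average signal energy in a short recent window with the average in a longer window, and flags the first sample where the ratio crosses a threshold.

```python
import math
import random


def sta_lta(signal, n_sta, n_lta):
    """Return the STA/LTA energy ratio per sample (0.0 until the long window is full)."""
    # Running sum of squared amplitudes, so each window average is one subtraction.
    csum = [0.0]
    for x in signal:
        csum.append(csum[-1] + x * x)
    ratios = [0.0] * len(signal)
    for i in range(n_lta, len(signal) + 1):
        # Both windows end at sample i - 1.
        sta = (csum[i] - csum[i - n_sta]) / n_sta
        lta = (csum[i] - csum[i - n_lta]) / n_lta
        ratios[i - 1] = sta / lta if lta > 0 else 0.0
    return ratios


def first_trigger(ratios, threshold):
    """Index of the first sample whose ratio reaches the threshold, or None."""
    for i, r in enumerate(ratios):
        if r >= threshold:
            return i
    return None


# Synthetic trace (illustrative only): background noise with a burst at sample 600.
random.seed(0)
trace = [random.gauss(0.0, 1.0) for _ in range(1000)]
for i in range(600, 700):
    trace[i] += 10.0 * math.sin(2 * math.pi * (i - 600) / 20.0)

# Hypothetical settings: 20-sample short window, 200-sample long window, threshold 4.
ratios = sta_lta(trace, n_sta=20, n_lta=200)
trigger = first_trigger(ratios, threshold=4.0)
print("first trigger at sample", trigger)
```

Note that the detector reacts to any sudden increase in energy, whatever its cause. It has no notion of whether the burst came from an earthquake or from a passing truck.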

One of the most common problems with this type of system is unwanted associations. STA/LTA detectors don’t just detect earthquakes; they also detect other signals generated by things such as wind, cars, trains, and other sources of seismic vibrations. The association process can combine these non-seismic signals into false events. In addition, poor estimates of seismic wave arrivals can result in poor earthquake locations. A seismologist must then spend a fair amount of time cleaning up the false events and poor arrival times. Reducing the amount of human intervention needed could potentially shave minutes off the time between the earthquake happening and the earthquake being reported.
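The way unrelated detections can turn into a false event can be sketched with a deliberately simplified associator. This is a hypothetical illustration, not the NEIC algorithm: it groups detections only by how close together in time they are, whereas real associators also check that the arrival times are consistent with a single source location. The station names, times, and parameters below are invented.

```python
def associate(picks, window_s, min_stations):
    """Group (station, time_s) picks that fall within window_s of the first pick
    in a group; keep only groups seen by at least min_stations distinct stations."""
    events = []
    current = []
    for station, t in sorted(picks, key=lambda p: p[1]):
        # Close the current group when the next pick is too late to belong to it.
        if current and t - current[0][1] > window_s:
            if len({s for s, _ in current}) >= min_stations:
                events.append(current)
            current = []
        current.append((station, t))
    if len({s for s, _ in current}) >= min_stations:
        events.append(current)
    return events


# Invented picks: one real earthquake seen at four stations, followed by three
# unrelated noise detections (say a truck, wind, and a train) that happen to
# occur close together in time.
picks = [
    ("STA1", 100.0), ("STA2", 103.2), ("STA3", 105.1), ("STA4", 108.7),
    ("STA1", 400.0), ("STA2", 403.0), ("STA3", 405.5),
]

loose = associate(picks, window_s=30.0, min_stations=3)
strict = associate(picks, window_s=30.0, min_stations=4)
print(len(loose), "events with a 3-station minimum")
print(len(strict), "events with a 4-station minimum")
```

With the looser setting, the three noise detections are associated into a second, false event. Tightening the rule removes it here, but at the cost of missing real earthquakes recorded by only a few stations, which is why better information about each detection is so valuable.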

The NEIC monitoring system is a perfect candidate for deep learning because it processes large amounts of data, and that’s where deep learning excels. What is deep learning? In the most general terms, deep learning is a computer process that can take in large amounts of data and learn from it. This exercise is referred to as “training”, and the outcome is a model that contains useful information. A model is a mathematical approximation of a real thing that can be used to represent that thing in computations.

Yeck and his research team sought to find out if a deep-learning approach could perform better than NEIC’s traditional STA/LTA data processing methods to get more accurate earthquake locations and magnitudes. Instead of throwing out the current STA/LTA system and replacing it with a fully deep-learning-based system, the researchers set out to see if the information from deep-learning models could be fed back into the conventional STA/LTA system as additional data that might improve the processing results. NEIC researchers trained a machine-learning algorithm using a dataset of about 1.3 million seismic wave arrival times for 136,716 earthquakes that occurred from 2013 to 2019. Three different models were created from the data to estimate: 1) the seismic wave arrival times, 2) the type of seismic signal (P-wave, S-wave, or noise), and 3) the distance between the earthquake and the seismic station.

The model results were compared to a test dataset of cataloged earthquakes considered to be the “ground truth” in order to evaluate the performance of the models. The results gave a mean error of 0.57 seconds for the pick arrival times, 92.8% accuracy for the signal types, and 82.4% accuracy on the earthquake-to-station distances. The models were almost as good as the automatic results that had been checked and adjusted by seismologists!
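For readers curious how scores like these are computed, the sketch below shows two common measures in Python. It assumes that "mean error" means the average absolute difference between predicted and reference arrival times, which the article does not spell out, and every number in it is made up for illustration rather than taken from the study.

```python
def mean_absolute_error(predicted, reference):
    """Average absolute difference between paired values (here, seconds)."""
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(reference)


def accuracy(predicted_labels, reference_labels):
    """Fraction of labels that match the reference exactly."""
    matches = sum(p == r for p, r in zip(predicted_labels, reference_labels))
    return matches / len(reference_labels)


# Made-up arrival times in seconds: model picks vs. analyst-reviewed picks.
model_times = [10.2, 20.0, 29.5, 41.0]
analyst_times = [10.0, 20.4, 30.0, 40.0]

# Made-up signal types: model labels vs. analyst-reviewed labels.
model_types = ["P", "S", "noise", "P", "S"]
analyst_types = ["P", "S", "P", "P", "S"]

pick_error = mean_absolute_error(model_times, analyst_times)
type_accuracy = accuracy(model_types, analyst_types)
print(f"mean pick error: {pick_error:.3f} s")
print(f"signal-type accuracy: {type_accuracy:.1%}")
```

In this toy example the picks are off by about half a second on average and four of the five signal types are labeled correctly.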

Next, the scientists tested the three models by incorporating them into a copy of the NEIC processing system running in parallel with NEIC’s operational system. The test system produced fewer unwanted associations, detected a similar but slightly higher number of earthquakes overall, and calculated more accurate earthquake locations and magnitudes. Altogether this means the system using deep-learning data resulted in far fewer errors for a seismologist to spend time fixing, which means the earthquakes could be reported faster. Success!

One of the challenges of using deep learning for global earthquake monitoring is that very large earthquakes occur relatively infrequently and therefore are underrepresented in the training data. That means these models work best for the more common smaller events, while traditional detection methods may be best for larger, rarer events. The USGS scientists plan to explore ways to incorporate more deep-learning models into NEIC’s automatic earthquake processing system to address the infrequent large events and make further improvements in the future. Maybe someday all earthquake information, regardless of location or magnitude, will be available only a few minutes, or even seconds, after the shaking stops.

- written by Lisa Wald, USGS, March 12, 2021

For More Information:

- National Earthquake Information Center (NEIC)

- Latest Earthquakes

- William Luther Yeck, John M. Patton, Zachary E. Ross, Gavin P. Hayes, Michelle R. Guy, Nick B. Ambruz, David R. Shelly, Harley M. Benz, Paul S. Earle; Leveraging Deep Learning in Global 24/7 Real‐Time Earthquake Monitoring at the National Earthquake Information Center. Seismological Research Letters 2020; 92 (1): 469–480. doi: https://doi.org/10.1785/0220200178

The Scientists Behind the Science

The first author on the study summarized here, Will Yeck, is a research geophysicist at the USGS National Earthquake Information Center. His research is aimed at improving our ability to rapidly and accurately characterize earthquakes as well as interpret the seismotectonics of significant events. He has led the development of many core NEIC earthquake processing elements. In his free time, Will likes to trail-run, climb, and read.

Release Date: MARCH 12, 2021

On January 20, 2021 at 8:32am light shaking interrupted breakfast customers at a local coffee shop south of downtown Los Angeles, California. Everyone paused briefly while they waited to see if it was going to stop… or start shaking harder.

A few mobile phones pinged a couple of minutes later with the information that a magnitude 3.5 (M3.5) earthquake a few miles away in Inglewood was what they had felt. Ideally, earthquake information would always arrive so quickly, but that’s not always the case. And information about moderate to large earthquakes in some locations can take 15-20 minutes to arrive in the hands of those who want or need it. Larger earthquakes mean more data from more seismic stations, and that means a longer processing time for the monitoring system and the seismologist reviewing it.

Only a few decades ago, this same information would’ve taken several days to determine. Drive to the seismic station, retrieve the photographic film, bring it back to the lab, process it. With several earthquake recordings in hand and a ruler, measure the height of the wiggles and the time between the wiggles recorded at each station, and then triangulate on a location and estimate a magnitude. Today’s computer and communication technologies have mostly automated this process and made it much faster. Even so, seismologists manually review automated earthquake locations and magnitudes to make corrections when warranted because sometimes computer programs make mistakes. In a recent study, Yeck’s research team explored deep learning as a way to improve the automated earthquake monitoring program used by the National Earthquake Information Center (NEIC) so that there would be fewer mistakes and ultimately reduce the need for manual reviews.

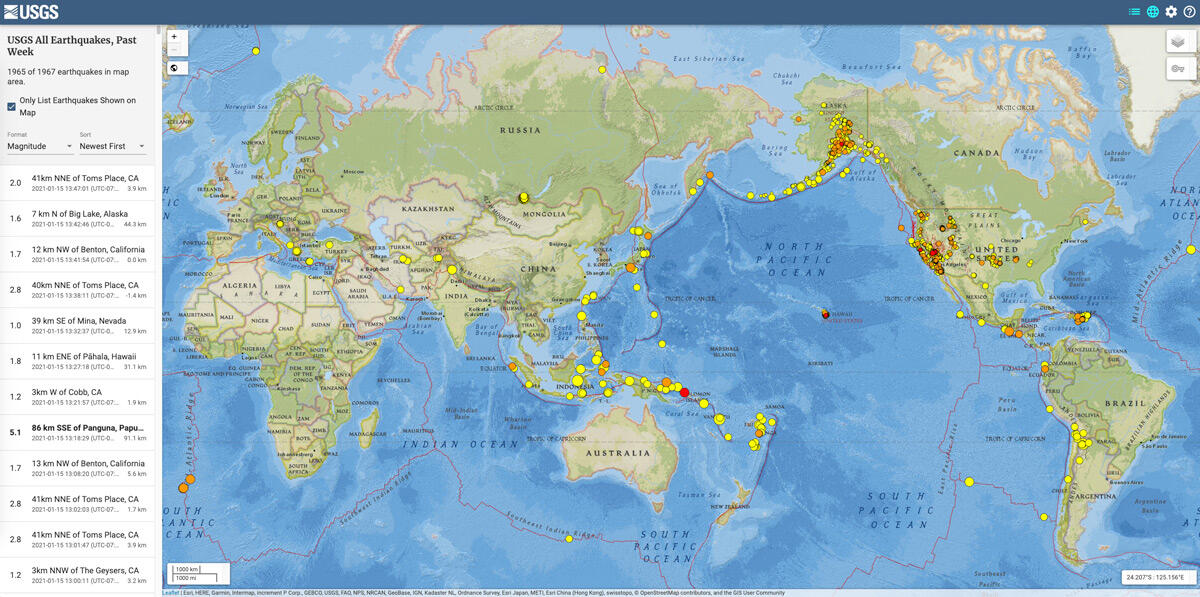

The NEIC processes M2.5+ earthquakes in the U.S. and M4.5+ earthquakes across the globe. This amounts to about 30,000 earthquakes each year. NEIC’s automated processes continuously monitor signals from seismic stations around the world to detect changes that look different than the background noise using what is called a short-term average/long-term average (STA/LTA) detector. The system tags the detection times of seismic wave arrivals at each station and tries to associate the detections from individual stations into a single dataset for a possible earthquake. The system then identifies the type of seismic wave, determines the location of the earthquake, and estimates the earthquake’s magnitude. Seismologists validate the automated arrival times to make corrections and adjustments to improve the accuracy of the earthquake location and magnitude before the earthquake information is distributed to mobile phones, sent to email addresses, and published to the USGS Earthquake Hazards Program website.

One of the most common problems with this type of system is unwanted associations. STA/LTA detectors don’t just detect earthquakes; they also detect other signals generated by things such as wind, cars, trains, and other sources of seismic vibrations. The association process can combine these non-seismic signals into false events. In addition, poor estimates of seismic wave arrivals can result in poor earthquake locations. A seismologist must then spend a fair amount of time cleaning up the false events and poor arrival times. Reducing the amount of human intervention needed could potentially shave minutes off the time between the earthquake happening and the earthquake being reported.

The NEIC monitoring system is a perfect candidate for deep learning because it processes large amounts of data, and that’s where deep learning excels. What is deep learning? In the most general terms, deep learning is a computer process that can take in large amounts of data and learn from it. This exercise is referred to as “training”, and the outcome is a model that contains useful information. A model is a mathematical approximation of a real thing that can be used to represent that thing in computations.

Yeck and his research team sought to find out if a deep-learning approach could perform better than NEIC’s traditional STA/LTA data processing methods to get more accurate earthquake locations and magnitudes. Instead of throwing out the current STA/LTA system and replacing it with a fully deep-learning-based system, the researchers set out to see if the information from deep-learning models could be fed back into the conventional STA/LTA system as additional data that might improve the processing results. NEIC researchers trained a machine-learning algorithm using a dataset from of about 1.3 million seismic wave arrival times for 136,716 earthquakes that occurred during 2013 to 2019. Three different models were created from the data, including: 1) the seismic wave arrival times, 2) the type of seismic signal (P-wave, S-wave, or noise), and 3) the distance between the earthquake and the seismic station.

The model results were compared to a test dataset of cataloged earthquakes considered to be the “ground-truth” in order to evaluate the performance of the models. The results gave a mean error of 0.57 seconds for the pick arrival times, 92.8% accuracy for the signal types, and 82.4% accuracy on the earthquake to station distances. The models were almost as good as the automatic results that had been checked and adjusted by seismologists!

Next, the scientists tested the three models by incorporating them into a copy of the NEIC processing system running in parallel with NEIC’s operational system. The test system produced fewer unwanted associations, detected a similar but slightly higher number of earthquakes overall, and calculated more accurate earthquake locations and magnitudes. Altogether this means the system using deep-learning data resulted in far fewer errors for a seismologist to spend time fixing, which means the earthquakes could be reported faster. Success!

One of the challenges of using deep learning for global earthquake monitoring is that very large earthquakes occur relatively infrequently and therefore are underrepresented in the training data. That means these models work best for the more common smaller events, while traditional detection methods may be best for larger, rare, events. The USGS scientists plan to explore ways to incorporate more deep-learning models into NEIC’s automatic earthquake processing system to address the infrequent large events and make further improvements in the future. Maybe some day all earthquake information, regardless of location or magnitude, will be available in only a few minutes, or even seconds, after the shaking stops.

- written by Lisa Wald, USGS, March 12, 2020

For More Information:

- National Earthquake Information Center (NEIC)

- Latest Earthquakes

- William Luther Yeck, John M. Patton, Zachary E. Ross, Gavin P. Hayes, Michelle R. Guy, Nick B. Ambruz, David R. Shelly, Harley M. Benz, Paul S. Earle; Leveraging Deep Learning in Global 24/7 Real‐Time Earthquake Monitoring at the National Earthquake Information Center. Seismological Research Letters 2020; 92 (1): 469–480. doi: https://doi.org/10.1785/0220200178

The Scientists Behind the Science

The first author on the study summarized here, Will Yeck, is a research geophysicist at the USGS National Earthquake Information Center. His research is aimed at improving our ability to rapidly and accurately characterize earthquakes as well as interpret the seismotectonics of significant events. He has led the development of many core NEIC earthquake processing elements. In his free time, Will likes to trail-run, climb, and read.